If you use a passphrase to control access to your computer, as you probably should, then it has no doubt become second nature to type it quickly when you sit down to get to work. If you’ve set an aggressive lock-screen timeout, as you probably also should, then you have become blazingly efficient at typing this password. Perhaps too blazing, perhaps too efficient.

If this sounds like you so far, perhaps I can complete the picture by describing the heart-stopping horror of sitting down at your computer after a short time away, methodically typing your password in to unlock it, only to realize the computer wasn’t locked at all, and you just typed it into a chat window, or worse, posted it to Twitter.

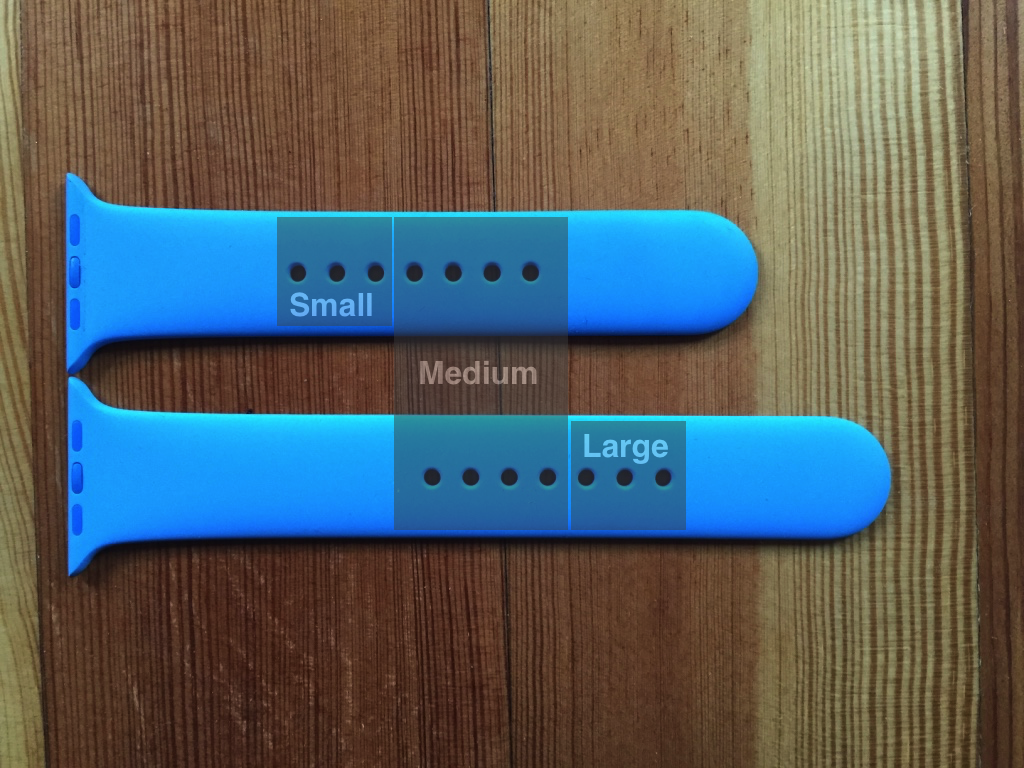

I set out recently to address this problem on my computer by writing my own nefarious little tool, which would act as a global keystroke sniffer, looking for any indication that I am typing my password, at which point it puts up a helpful reminder:

The beauty of this tool is it catches me at the moment I type my password (actually just a prefix of it, but that’s a technicality), and by nature of putting up a modal dialog that jumps in my face, absorbs any muscle-memory-driven effort to complete the password and press return in whatever insecure text field I might have been typing into.
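The prefix-matching idea can be sketched in a few lines of Python. This is only an illustration of the matching logic, not the actual tool: the class name `PrefixWatcher`, the salted digest, and the sample prefix are all assumptions of mine, and the part that actually observes global keystrokes on the Mac is left out entirely.

```python
import hashlib
import hmac
from collections import deque


class PrefixWatcher:
    """Flags the moment the most recent keystrokes match a secret prefix."""

    def __init__(self, prefix: str, salt: bytes = b"demo-salt"):
        if not prefix:
            raise ValueError("prefix must not be empty")
        # Assumption: keep only a salted digest of the prefix, so the sketch
        # does not need to hold the prefix itself after construction.
        self._length = len(prefix)
        self._salt = salt
        self._digest = self._hash(prefix)
        # Rolling window holding only the last len(prefix) characters typed.
        self._recent = deque(maxlen=self._length)

    def _hash(self, text: str) -> bytes:
        return hashlib.sha256(self._salt + text.encode("utf-8")).digest()

    def feed(self, char: str) -> bool:
        """Record one typed character; return True if the prefix was just completed."""
        self._recent.append(char)
        if len(self._recent) < self._length:
            return False
        candidate = "".join(self._recent)
        matched = hmac.compare_digest(self._hash(candidate), self._digest)
        if matched:
            self._recent.clear()  # start fresh after raising the alarm
        return matched


# Hypothetical usage: the alarm fires as soon as "hunt" has been typed,
# before the rest of the password follows.
watcher = PrefixWatcher("hunt")
hits = [watcher.feed(c) for c in "go hunter2"]
```

Matching on a prefix rather than the whole password is what lets the warning appear before the final characters and the return key are typed.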

You may wonder whether this prevents the legitimate entry of my password, e.g. into fields such as the system presents when asking me to confirm an administrator task. The answer is no, because part of the beauty of those standardized password fields is that Apple has taken care to enable a secure keyboard entry mode while these fields are active. While a standard password field is focused, none of your typing is (trivially) available to other processes on the system. So my tool, along with any other keyboard loggers that may be installed on the system, is at least prevented from seeing passwords being typed.

I’ve been running my tool for a few weeks, confident in the knowledge that it will prevent me from accidentally typing my password into a public place. But its aggressive nature has also revealed to me a couple of areas that I expected to be secure, but which are not.

Insecure Input Fields

The first insecure input area I noticed was the Terminal. As a power-user, it is not terribly uncommon for me to invoke super-user powers in order to e.g. clean up a system-owned cache folder, install additional system packages, kill system-owned processes that are flying out of control, or simply poke around at parts of the system that are normally off-limits. For example, sometimes I edit the system hosts file to force a specific hostname to map to an artificial IP address:

sudo vi /etc/hosts

Password:•

The nice “•” is new to Yosemite, I believe. Previously tools such as sudo just blocked typing, leaving a blank space. But in Yosemite I notice the same “secure style” bullet is displayed in both sudo and ssh, when prompting for a password. To me this implies a sense of enhanced security: clearly, the Terminal knows that I am inputting a password here, so I would assume it applies the same care that the rest of the system does when I’m entering text into a secure field. But it doesn’t. When I type my password to sudo something in the Terminal, my little utility barks at me. There’s no way around it: it saw me typing my password. I confirmed that it sees my typing when entering an ssh password, as well.

The other app I noticed a problem with is Apple’s own Screen Sharing app. While logged in to another Mac on my network, I happened to want to connect back, via AppleShare, to the Mac I was connecting from. To do this, I had to authenticate and enter my password. Zing! Up comes my utility, warning me of the transgression. Just because the remote system is securely accepting my virtual keystrokes, doesn’t mean the local system is doing anything special with them!

What Should You Do?

If you do type sensitive passwords into Terminal or Screen Sharing, what should you do to limit your exposure? Terminal in particular makes it easy to enable the same secure keyboard entry mode that standard password fields employ, but to leave it active the entire time you are in Terminal. To activate this, just choose Terminal -> Secure Keyboard Entry. I have confirmed that when this option is checked, my tool is not able to see the typing of passwords.

Why doesn’t Apple enable this option in Terminal by default? The main drawback here is that my tool, or other tools like it, can’t see any of your typing. This sounds like a good thing, except if you take advantage of very handy utilities such as TextExpander, which rely upon having respectful, trusted access to the content of your typing in order to provide real value. Furthermore, if you rely upon assistive software such as VoiceOver, enabling Secure Keyboard Entry could impact the functionality of that software. In short: turning on secure mode shuts down a broad variety of software solutions that may very well be beneficial to users.

As for Screen Sharing, I’m not sure there is any way to protect your typing while using it. As a “raw portal” to another machine, it knows nothing about the context of what you’re doing, so as far as it’s concerned your typing into a password field on the other machine is no different from typing into a word processor. Unfortunately, Screen Sharing does not offer a similar option to Terminal’s application-wide “Secure Keyboard Entry.”

What Should Apple Do?

Call me an idealist, but every time that tell-tale • appears in Terminal, the system should be protecting my keystrokes from snooping processes. I don’t know the specifics of how or why, for example, both ssh and sudo receive the same treatment at the command line, but I suspect it has to do with them using a standard UNIX mechanism for requesting passwords, such as the function “getpass()” or “pam_prompt()”. Knowing little about the infrastructure here, I’m not going to argue that it’s trivial for Apple to make this work as expected, but being in charge of all the moving parts, they should make it a priority to handle this sensitive data as common sense would dictate.
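For what it’s worth, a getpass()-style prompt conventionally works by switching off the terminal’s echo flag while the password is read. The sketch below shows that mechanism using Python’s termios module; the helper names `clear_echo` and `echo_disabled` are mine, and I am not claiming this is exactly what sudo or ssh do internally. The point is that echo is a setting on the terminal device, and nothing in it stops another process from observing the keystrokes on their way to the Terminal window.

```python
import contextlib
import termios


def clear_echo(attrs: list) -> list:
    """Return a copy of tcgetattr()-style attributes with the ECHO flag cleared."""
    quiet = list(attrs)
    quiet[3] = quiet[3] & ~termios.ECHO  # index 3 holds the local-mode flags
    return quiet


@contextlib.contextmanager
def echo_disabled(fd: int):
    """Turn off echo on terminal `fd` for the duration of the block, then restore it."""
    saved = termios.tcgetattr(fd)
    termios.tcsetattr(fd, termios.TCSAFLUSH, clear_echo(saved))
    try:
        yield
    finally:
        # Always restore the original settings, even if reading fails.
        termios.tcsetattr(fd, termios.TCSAFLUSH, saved)
```

A prompt built on this would wrap its read of the password line in `with echo_disabled(fd):`, where `fd` is the file descriptor of the controlling terminal.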

For Screen Sharing, I would argue that Apple should offer a similar option to Terminal’s “Secure Keyboard Entry” mode, except that perhaps with Screen Sharing, it should be enabled by default. The sense of separation and abstraction from the “current machine” is so great with Screen Sharing, that I’m not sure it’s valuable or expected that keyboard events should be intercepted by processes running on the local machine.

What Should Other Developers Do?

Apple makes a big deal in a technical note about secure input, that developers should “use secure input fairly.” By this they mean to stress that any developer who opts to enable secure input mode (the way Terminal does) should do so in a limited fashion and be very conscientious that it be turned back off again when it’s no longer needed. This means that ideally it should be disabled within the developer’s own app except for those moments when e.g. a password is being entered, and that it should absolutely be disabled again when another app is taking control of the user’s typing focus.
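One way to picture that discipline is to pair every enable with a guaranteed disable. The sketch below does this with a Python context manager. `EnableSecureEventInput` and `DisableSecureEventInput` are the Carbon functions that control this mode, but everything else is my own assumption: the framework path passed to ctypes, the helper names, and the idea of driving these calls from a script at all. A real app would make the same calls from its own code around the moment a password is typed.

```python
import contextlib
import ctypes


@contextlib.contextmanager
def secure_input(enable, disable):
    """Hold secure input only for the duration of the block, then always release it."""
    enable()
    try:
        yield
    finally:
        # Runs on normal exit and on exceptions, so the mode is never left on.
        disable()


def carbon_hooks():
    """Return (enable, disable) callables backed by Carbon. macOS only.

    Assumption: the framework lives at the path below and can be loaded
    with ctypes. This function is not called automatically.
    """
    carbon = ctypes.CDLL("/System/Library/Frameworks/Carbon.framework/Carbon")
    return carbon.EnableSecureEventInput, carbon.DisableSecureEventInput


# Hypothetical usage on a Mac:
#     enable, disable = carbon_hooks()
#     with secure_input(enable, disable):
#         ...  # accept the password here, and nothing else
```

Keeping the protected region as small as the password entry itself is what leaves utilities like TextExpander and VoiceOver working the rest of the time.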

Despite the strong language from Apple, it makes sense to me that some applications should nonetheless take a stronger stance in enabling secure input mode when it makes sense for the app. For example, I think other screen sharing apps such as Screens should probably offer a similar (possibly on by default) option to secure all typing to an open session. I would see a similar argument for virtualization software such as VMware Fusion. It’s arguable that virtualized environments tend to contain less secure data, but it seems dangerous to make that assumption. I think it does not serve the user’s expectations for security that whole classes of application permit what appears to be secure typing (e.g. in a secure field in the guest operating system) that is nonetheless visible to processes running on the system that is running the virtualization.

What Should I Do?

Well, apart from writing this friendly notice to let you know what you’re all up against, I should certainly file at least two bugs. And I have:

- Radar #19189911 – “Standard” password input in the Terminal should activate secure input

- Radar #19189946 – Screen Sharing should offer support for securing keyboard input

Hopefully the information I have shared here helps you to have a better understanding of the exposure Terminal, Screen Sharing, and other apps may be subjecting you to with respect to what you might have assumed was secure keyboard input.